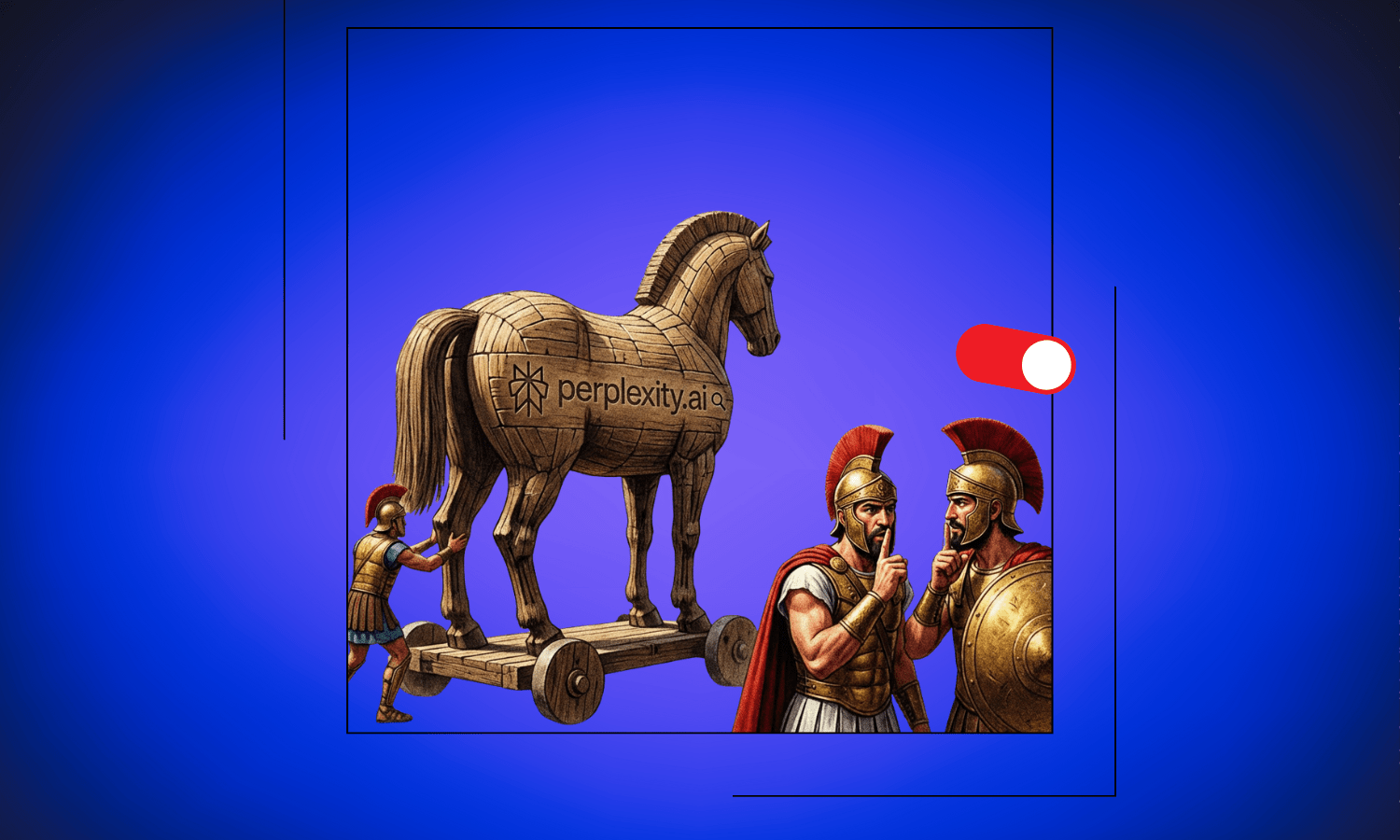

Perplexity PRO gift from telecom providers is a Trojan horse disguised as a free AI assistant – Here's how to protect yourself immediately

Millions accepted this free Perplexity PRO gift from their carriers—and unknowingly fed their most sensitive secrets into an AI training machine

The Perplexity “Free” Upgrade Risk: Quick overview

If you’re one of millions who recently scored a free Perplexity PRO subscription through your telecom provider, congratulations. You’ve just become part of one of AI’s most aggressive data collection campaigns. While carriers like Airtel (India), Deutsche Telekom (Germany), Telefónica (Spain), Bouygues Telecom (France), SK Telecom (South Korea), Taiwan Mobile (Taiwan), Singtel (Singapore), Tango (Luxembourg) and others are promoting this as a premium perk, there’s a hidden catch: By default, your most private thoughts are being used to train Perplexity’s AI models.

Perplexity isn’t just expanding. It’s systematically embedding itself into telecom infrastructure across continents. From Airtel in India to Tango in Luxembourg, Telefónica in Spain to Taiwan Mobile, the company has struck partnerships with carriers serving hundreds of millions of users.

The pitch sounds great: free PRO features including unlimited searches, advanced AI models, and image generation.

But here’s what they don’t advertise in bold letters. Every query you make, every business idea you brainstorm, every sensitive question you ask is feeding their training pipeline by default. This isn’t an oversight. It’s the business model.

The Global Data Harvesting Strategy

These partnerships allow Perplexity to bypass traditional user acquisition costs. Instead of having to convince you to sign up, Perplexity gets bundled into a service you already pay for.

The result? A massive influx of user data. Think about what you’re actually sharing with an AI assistant:

- Business strategies

- Health concerns

- Financial planning

- Personal relationships

- Career anxieties

These aren’t casual web searches. They’re your unfiltered thoughts, often more revealing than what you’d share with close friends. Handing this data to a company that defaults to training on it shows where their priorities lie.

Here’s how to prevent your chats from being used for training: The hidden switch

When you activate your “free” PRO subscription, Perplexity quietly enables AI data training. No prominent warning, no consent dialog, just a buried setting that assumes you’re comfortable with your private conversations becoming training data.

Here is how you protect your future chats in Perplexity:

- Navigate to perplexity.ai and click your profile image

- Select Preferences from the menu

- Find the AI data retention setting, which is the switch that controls whether your chats are used for training

- Toggle this to OFF immediately. The slider should point to the left and appear grey.

This should be the default. The fact that it’s not tells you everything about how Perplexity views user privacy versus data acquisition.

Why “Opting Out” Isn’t Enough

Even after disabling data training, trusting Perplexity with genuinely sensitive information is risky. The company has already shown that growth and data collection matter more than privacy-first principles. Their partnership strategy speaks volumes. They’re spreading through telecom providers who can bundle the service without users actively choosing it. This approach prioritizes scale and data volume above everything else.

The opt-out toggle provides legal cover, not genuine privacy protection. Once you’ve shared sensitive information, you can’t un-share it. You can’t verify what was already ingested into training pipelines before you discovered the setting, and you have no way to confirm from the outside that the toggle is actually respected.

Perplexity is known to be far from privacy-respecting

We’ve already exposed their past mess-ups. In fact, we were the first to uncover a critical privacy flaw where user-uploaded images were stored publicly for anyone to see. They fixed that specific issue, but they remain heavily dependent on Big Tech providers like Amazon, Cloudflare and Google.

For example, when you ask a question, the site fetches favicons via Google—sending your device info and IP address straight to Mountain View. They also rely entirely on Amazon Web Services. If AWS goes down, Perplexity goes down. If Cloudflare goes down, guess what? Perplexity goes down too.

It’s not just about reliability; it’s about privacy. Perplexity shares hundreds of data points about you (like your IP address, device type, browser version, and more) with these third parties constantly. It’s actually hard to believe that billions of dollars are flowing into a company with this kind of setup. Unless, of course, you realize that in the age of AI, your data is worth more than gold.
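If you want to check this kind of third-party traffic yourself, one option is to export a HAR file from your browser’s developer tools (Network tab) while using the site and count which outside hosts were contacted. The snippet below is a minimal sketch of that idea, not a description of how Perplexity works internally. The function names, the sample hostnames and the sample data are all made up for illustration; only the HAR layout (`log.entries[].request.url`) comes from the HAR format itself.

```python
import json
from collections import Counter
from urllib.parse import urlsplit


def third_party_hosts(har: dict, first_party: str) -> Counter:
    """Count requests per host that does not belong to the first-party domain."""
    counts = Counter()
    for entry in har.get("log", {}).get("entries", []):
        host = urlsplit(entry["request"]["url"]).hostname or ""
        # A host is first-party if it is the domain itself or one of its subdomains.
        is_first_party = host == first_party or host.endswith("." + first_party)
        if host and not is_first_party:
            counts[host] += 1
    return counts


def load_har(path: str) -> dict:
    """Load a HAR file exported from the browser's developer tools."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


# Hypothetical, hand-written sample. These hostnames are placeholders,
# not a recording of real Perplexity traffic.
SAMPLE_HAR = {
    "log": {
        "entries": [
            {"request": {"url": "https://www.perplexity.ai/search?q=test"}},
            {"request": {"url": "https://perplexity.ai/"}},
            {"request": {"url": "https://icons.thirdparty-a.example/favicon?domain=example.com"}},
            {"request": {"url": "https://icons.thirdparty-a.example/favicon?domain=example.org"}},
            {"request": {"url": "https://cdn.thirdparty-b.example/app.js"}},
        ]
    }
}

if __name__ == "__main__":
    for host, hits in third_party_hosts(SAMPLE_HAR, "perplexity.ai").most_common():
        print(f"{host}: {hits} request(s)")
```

To run it against your own session, replace `SAMPLE_HAR` with `load_har("your-export.har")`. Every host in the output received at least your IP address and the usual browser headers.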

The Real Cost of “Free”

Perplexity’s telecom partnerships are brilliant from a growth perspective. They’ve found a way to reach massive user bases without traditional marketing costs, positioning their service as a premium benefit. But for users, especially those who don’t discover that data training toggle, the cost is surveillance disguised as assistance.

Your AI assistant should be a tool that amplifies your thinking, not a surveillance mechanism that monetizes it. Before you pour your most sensitive questions into Perplexity, even with training disabled, ask yourself this: do I trust a company that designed this system with data collection as the default?

The Privacy-First Alternative

If you actually value your private thoughts staying private, you need an AI assistant built with privacy as a core principle, not an afterthought hidden in settings. Solutions like CamoCopy prove you don’t have to sacrifice AI capabilities for data protection.

These services deliver the same conversational AI power, the same ability to process complex queries and generate insights, but with a fundamental difference. Your data stays yours. No training pipelines, no corporate analysis of your thought patterns, no risk of your sensitive queries becoming someone else’s dataset.

Important concluding remark

Be sure to share this article with friends or colleagues, because this default data collection setting affects all PRO users, including those who pay for PRO, and is not limited to recipients of a mobile carrier’s gift. Show them the steps above so they can protect their chats if they want to continue using Perplexity, or point them to CamoCopy if they’re looking for an AI assistant that doesn’t finance itself through their data.