What is Shadow AI? Why it's a big problem, and how to solve it

From ChatGPT to Gemini, AI tools are reshaping work. But at what cost to privacy and security?

What is Shadow AI? Quick definition

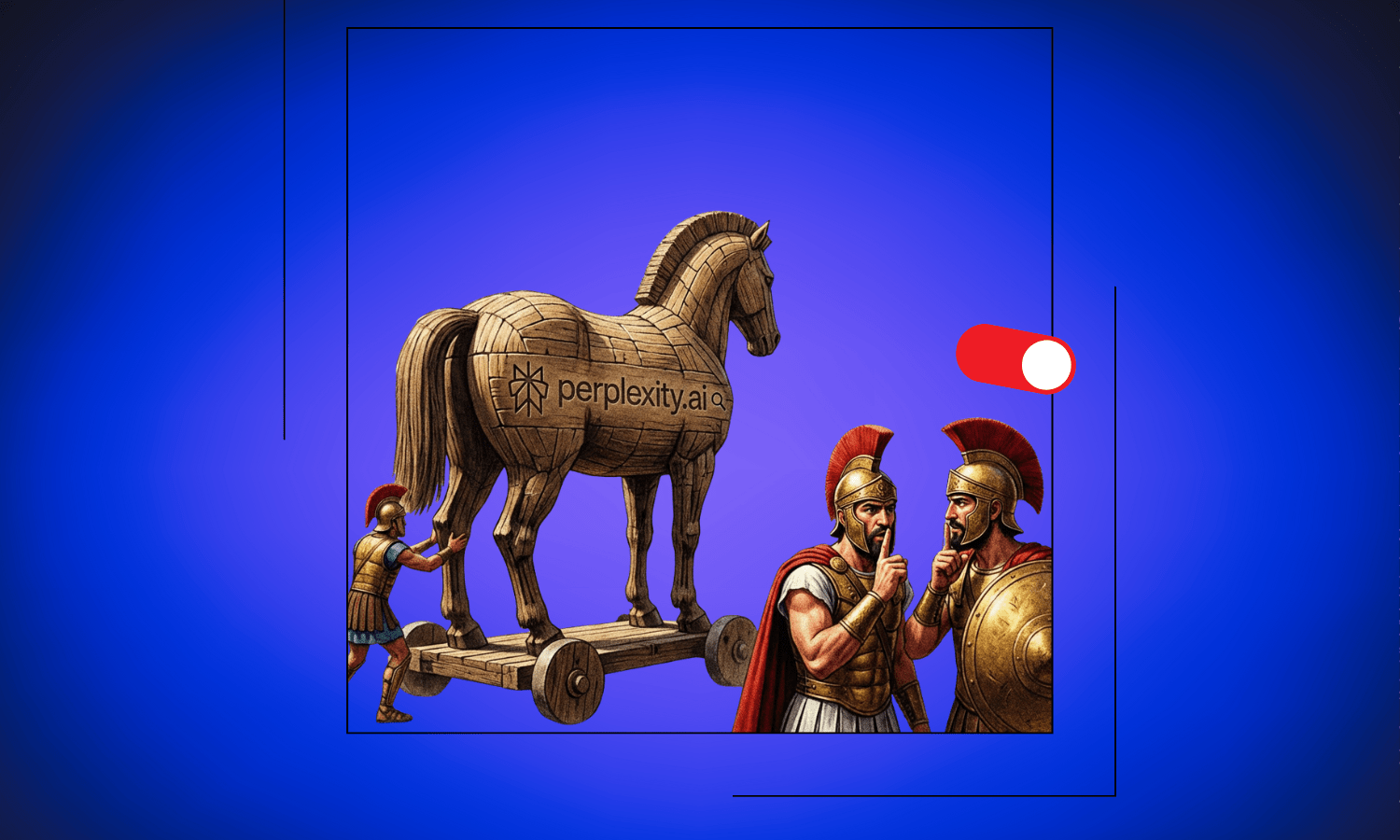

Shadow AI is the use of artificial intelligence tools and services by employees without the approval, oversight, or knowledge of IT departments or management. Unlike approved enterprise AI systems, shadow AI typically involves consumer platforms like ChatGPT, Gemini, Claude, and Perplexity that workers adopt on their own to boost productivity. In the process, they often share sensitive company data, trade secrets, and confidential information with third-party AI providers, who may use these conversations to train their models.

The morning starts like any other. Your marketing manager opens a browser tab and types a question into ChatGPT about positioning strategy for your upcoming product launch. Your developer pastes code into Claude to debug a tricky problem. Your sales rep asks Gemini to help draft a proposal for your biggest prospect. By lunchtime, your finance team has uploaded this quarter’s projections to an AI tool to analyze trends.

None of them asked permission. None of them considered where that data goes. And none of them realized they had just given your company’s competitive intelligence to systems designed to learn from every interaction.

Welcome to shadow AI, the phenomenon that’s reshaping how work gets done while creating security blind spots that most organizations don’t even know exist. The good news? There’s a clear path forward that doesn’t require choosing between productivity and privacy, a solution we’ll explore at the end of this article.

What Shadow AI Actually Means

In practice, shadow AI means employees using AI tools and services that IT departments and management have not approved and often do not know about. Think of it as the AI equivalent of shadow IT, where workers adopt cloud services and software without going through official channels. The difference? Shadow AI isn’t just about using unapproved software. It’s about feeding potentially sensitive information into systems that are actively learning from your inputs.

The numbers tell a compelling story. Recent studies show that while most knowledge workers now use AI tools regularly to boost their productivity, 70% actively hide this usage from their employers. They’re not trying to be malicious. They’re trying to be efficient. These tools are free, powerful, and readily accessible. Why wait for corporate approval when ChatGPT can help you write that email right now?

But this convenience comes with invisible strings attached.

The Race to AGI and Why Your Data Matters

To understand why shadow AI poses such a significant risk, you need to understand the ultimate goal driving companies like OpenAI, Google, Anthropic, and others: Artificial General Intelligence, or AGI. AGI refers to AI systems that can understand, learn, and apply knowledge across any domain at or above human level. Unlike today’s narrow AI that excels at specific tasks, AGI would possess general cognitive abilities rivaling human intelligence.

Reaching AGI requires massive amounts of diverse, high-quality data. Every conversation you have with ChatGPT, every document you upload to Gemini, every brainstorming session you conduct with Claude becomes potential training material. These companies aren’t being secretive about this. Their terms of service often explicitly state that your interactions may be used to improve their models.

Here’s where it gets concerning. As AI becomes more capable, users find increasingly sophisticated ways to use these tools. You start with simple questions, then move to complex analysis, strategic planning, code generation, and creative problem-solving. Each evolution creates new training data that makes the next generation of AI even more capable, which encourages even more complex usage. It’s a self-reinforcing cycle that shows no signs of slowing down.

For AI companies racing to AGI, your interactions aren’t just customer service data. They’re the fuel for the next breakthrough. The more diverse and sophisticated the input, the more valuable it becomes. And right now, millions of workers are providing that fuel without anyone keeping track.

The Corporate Risk Nobody’s Talking About

For individual users, the privacy implications are significant. Your personal thoughts, creative ideas, and even casual conversations become part of a training dataset that exists far beyond your control. But for businesses, shadow AI represents an existential threat that most leadership teams haven’t fully grasped.

Consider what employees typically use AI tools for. They’re not just asking about the weather. They’re:

- Brainstorming product strategies

- Analyzing competitive intelligence

- Debugging proprietary code

- Drafting client proposals

- Working through financial projections

- Solving complex business problems

In other words, they’re sharing exactly the kind of information that gives your company its competitive edge.

When your developer pastes proprietary code into ChatGPT to debug it, that code could theoretically be used to train future models. When your marketing team discusses unreleased product features with an AI assistant, those strategies become part of a vast dataset. When your finance team uploads internal documents for analysis, it has essentially handed your numbers to a third party whose terms of service prioritize its own AI development goals.

The irony is sharp. The same tools employees use to work more efficiently and gain competitive advantage could be gradually eroding the very advantages they’re trying to amplify. Your innovation today becomes everyone’s baseline tomorrow when it filters into the next model update.

Why Employees Hide Their AI Usage

Understanding why 70% of workers hide their AI usage reveals the depth of this challenge. It’s not just about breaking rules. It’s about a fundamental disconnect between how work actually gets done and how organizations think it gets done.

Social stigma plays a major role. Many employees worry they’ll be seen as less competent if they admit to using AI assistance. There’s a perception that “real” work means doing everything yourself, that using AI is somehow cheating or taking shortcuts. Some fear their jobs might be at risk if management realizes AI can do parts of their role. Others simply don’t want to deal with bureaucratic approval processes when they can access tools instantly on their own.

The Vicious Cycle

This creates a vicious cycle:

- Because employees hide their usage, companies don’t develop clear policies

- Because there are no clear policies, employees don’t know what’s acceptable

- Because they don’t know what’s acceptable, they default to hiding their usage

- And all the while, sensitive data flows freely into systems designed to learn from it

The Privacy Problem Gets Bigger Over Time

The long-term privacy implications extend beyond immediate data leakage. Once information enters these training datasets, it becomes nearly impossible to remove. Even if a company later realizes the risk and implements policies, the data from months or years of shadow AI usage already exists somewhere in the training corpus of major AI models.

Consider the trajectory. As models become more sophisticated, they develop longer context windows and better memory systems. Future versions might be able to recall and synthesize information across millions of training examples. The strategic discussion your team had about market positioning in early 2024 could theoretically inform responses about industry trends years later. Your proprietary algorithm from 2025 might influence code suggestions in 2027.

The companies building these models have every incentive to collect as much data as possible. Training data is the most valuable resource in the race to AGI, and they’re getting it for free every time someone types a query or uploads a document. They’re not doing anything illegal. Users agree to terms of service when they create accounts. But most users never read those terms, and they certainly don’t consider the long-term implications for data that feels conversational and temporary but becomes permanent training material.

What This Means for Different Stakeholders

For Individual Professionals

Shadow AI usage creates personal privacy risks. Your career aspirations, financial concerns, health questions, and creative ideas all become data points. Your writing style, problem-solving approaches, and thought patterns get analyzed and incorporated into systems you have no control over.

For Small Businesses and Startups

The risks are particularly acute. These organizations often operate on razor-thin advantages. A novel approach to customer service, an innovative product feature, or a unique market insight might be their only edge against larger competitors. Losing that through gradual data leakage could be devastating, and they might never even know it happened.

For Enterprises

Shadow AI represents both a governance failure and a security vulnerability. It creates blind spots in data loss prevention systems, bypasses information security protocols, and makes a mockery of careful compliance frameworks. Regulated industries face additional concerns, as employees might inadvertently share information subject to HIPAA, GDPR, or other privacy regulations with systems that operate under different jurisdictions and legal frameworks.

The Path Forward

Solving the shadow AI challenge requires moving beyond simple prohibition. Banning AI tools won’t work when they’re this useful and accessible. Employees will find ways around restrictions, driving the problem even further underground. Instead, organizations need to open the doors to privacy-friendly alternatives that give employees the AI capabilities they need without the data collection risks.

Key Strategies

1. Provide Approved Alternatives: The smartest approach is to provide approved alternatives that respect privacy by default. Solutions like CamoCopy Enterprise make it simpler for employees to use sanctioned AI tools rather than finding workarounds to access banned systems. When the easiest path is also the safest path, shadow AI naturally diminishes.

2. Education: Most employees genuinely don’t understand where their data goes or how AI training works. They see these tools as simple question-and-answer systems, not as sophisticated data collection mechanisms feeding the race to AGI. Clear communication about risks helps workers make informed decisions.

3. Clear Policies: Employees need to know what’s acceptable, what’s forbidden, and why. Gray areas breed shadow usage. Specific guidance about different types of information and appropriate use cases gives people guardrails they can actually follow.

4. Ongoing Dialogue: AI capabilities evolve rapidly. Policies from six months ago might not address current risks or opportunities. Regular conversations about AI usage, risks, and benefits reduce the stigma that drives secretive behavior.
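
To make the idea of specific guidance per type of information more concrete, here is a minimal sketch in Python of how such a policy could be written down as a lookup table. It is an illustration under stated assumptions, not a recommended policy: the data categories, the two tool tiers, and the `is_allowed` helper are all hypothetical names invented for this example, and a real organization would define its own.

```python
# Hypothetical tool tiers (assumed for illustration only).
CONSUMER = "consumer"   # public AI chatbots used without a company agreement
APPROVED = "approved"   # AI tools the organization has sanctioned

# Hypothetical policy: which tool tiers each data category may be shared with.
POLICY = {
    "public_marketing_copy": {CONSUMER, APPROVED},
    "proprietary_code": {APPROVED},
    "financial_projections": {APPROVED},
    "regulated_personal_data": set(),  # may not be shared with any AI tool
}


def is_allowed(category: str, tool_tier: str) -> bool:
    """Return True if data in `category` may be shared with `tool_tier`.

    Categories missing from POLICY are denied, so gray areas fail closed.
    """
    return tool_tier in POLICY.get(category, set())


if __name__ == "__main__":
    for category in POLICY:
        for tier in (CONSUMER, APPROVED):
            verdict = "allowed" if is_allowed(category, tier) else "forbidden"
            print(f"{category} -> {tier}: {verdict}")
```

The one deliberate design choice in this sketch is that unknown categories are denied by default. Since gray areas breed shadow usage, a table like this is only useful if employees can also see it and understand the reasoning behind each row.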

The Privacy-First Alternative

The fundamental problem with shadow AI isn’t that employees want to be more productive. It’s that the most accessible AI tools prioritize their own advancement over user privacy. As long as the easiest option is also the riskiest option, shadow AI will persist.

This is where privacy-first AI assistants enter the picture. Tools that explicitly commit to never training on user data, that operate under strong privacy frameworks, and that put user control first offer a way out of the shadow AI trap. Privacy-focused alternatives like CamoCopy have pioneered this approach since late 2022, demonstrating that powerful AI assistance and strong privacy protections aren’t mutually exclusive.

The technology exists. The alternative exists. What’s needed now is awareness that shadow AI isn’t just an IT policy issue but a fundamental question about data ownership, competitive advantage, and the future of work in an AI-driven world.

The Choice Is Yours

If your team is already using AI tools, and statistically speaking they almost certainly are, the question isn’t whether to engage with AI. The question is whether that engagement happens in the shadows, feeding the race to AGI with your most sensitive information, or in the light, with tools designed to respect your privacy while delivering the capabilities you need.

For organizations and individuals ready to embrace AI without sacrificing privacy, CamoCopy offers a clear alternative built on privacy by default. Unlike mainstream AI platforms, CamoCopy:

- Never trains on your conversations

- Encrypts your documents and images

- Puts your data security first rather than treating your interactions as raw material for model improvement

The choice is yours, but the clock is ticking while shadow AI continues its invisible expansion.